CROHME: Competition on Recognition of Online Handwritten Mathematical Expressions

Contact Author

Harold Mouchère L’UNAM/IRCCyN/Université de Nantes, rue Christian Pauc, 44306 Nantes, France e: harold.mouchere@univ-nantes.fr

License

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported (CC BY-NC-SA 3.0) License.

Current Version

2.0

Keywords

Online Handwriting, Mathematical Expression Recognition, layout analysis, character recognition, Math retrieval

Description

The dataset provides more than 10,000 expressions handwritten by hundreds of writers from different countries, merging the data sets from 3 CROHME competitions. Writers were asked to copy printed expressions from a corpus of expressions. The corpus was designed to cover the diversity required by the different tasks, and its expressions were chosen from existing math corpora and from expressions embedded in Wikipedia pages. Different devices were used (different digital pen technologies, a whiteboard input device, a tablet with a touch-sensitive screen), so the data come in different scales and resolutions. The dataset provides only the online signal.

In the most recent competition, CROHME 2013, the test part is entirely new and the training part draws on 5 existing data sets:

- MathBrush (University of Waterloo),

- HAMEX (University of Nantes),

- MfrDB (Czech Technical University),

- ExpressMatch (University of Sao Paulo),

- the KAIST data set.

Furthermore, 6 participants of the 2012 competition provided their recognized expressions for the 2012 test part. These data enable research on decision fusion or evaluation metrics.

Metadata and Ground Truth Data

The CROHME dataset includes the segmentation, the label and the layout of each mathematical expression, using the InkML and MathML standards.

The ink corresponding to each expression is stored in an InkML file. An InkML file mainly contains three kinds of information:

- the ink: a set of traces made of points;

- the symbol level ground truth: the segmentation and label information of each symbol of the expression;

- the expression level ground truth: the MathML structure of the expression.

The two levels of ground truth information (at the symbol as well as at the expression level) are entered manually. Furthermore, some general information is added in the file:

- the channels (here, X and Y);

- the writer information (identification, handedness (left/right), age, gender, etc.), if available;

- the LaTeX ground truth (without any reference to the ink and hence, easy to render);

- the unique identification code of the ink (UI), etc.

The InkML format makes references between the digital ink of the expression, its segmentation into symbols and its MathML representation. Thus, the stroke segmentation of a symbol can be linked to its MathML representation.
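As a rough illustration of how the ink and the symbol level ground truth described above can be read, here is a minimal Python sketch. The embedded sample is hand-written for this example and is not taken from the dataset; the element layout it assumes (a top-level `traceGroup` containing one nested `traceGroup` per symbol, each with a `truth` annotation and `traceView` references) should be checked against the actual files. The function names `parse_traces` and `parse_symbols` are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the W3C InkML recommendation.
INKML_NS = {"ink": "http://www.w3.org/2003/InkML"}

# Hypothetical sample written for this sketch (assumed layout, not dataset content).
SAMPLE = """<ink xmlns="http://www.w3.org/2003/InkML">
  <annotation type="truth">$x+1$</annotation>
  <annotation type="UI">sample_0001</annotation>
  <trace id="0">10 10, 20 20, 30 30</trace>
  <trace id="1">30 10, 10 30</trace>
  <trace id="2">40 20, 60 20</trace>
  <trace id="3">50 10, 50 30</trace>
  <trace id="4">70 10, 70 30</trace>
  <traceGroup>
    <annotation type="truth">Segmentation</annotation>
    <traceGroup>
      <annotation type="truth">x</annotation>
      <traceView traceDataRef="0"/>
      <traceView traceDataRef="1"/>
    </traceGroup>
    <traceGroup>
      <annotation type="truth">+</annotation>
      <traceView traceDataRef="2"/>
      <traceView traceDataRef="3"/>
    </traceGroup>
    <traceGroup>
      <annotation type="truth">1</annotation>
      <traceView traceDataRef="4"/>
    </traceGroup>
  </traceGroup>
</ink>"""


def parse_traces(root):
    """Return {trace id: [(x, y), ...]} for every top-level trace."""
    traces = {}
    for trace in root.findall("ink:trace", INKML_NS):
        points = []
        for point in trace.text.strip().split(","):
            coords = point.split()
            # Only the X and Y channels are used here.
            points.append((float(coords[0]), float(coords[1])))
        traces[trace.get("id")] = points
    return traces


def parse_symbols(root):
    """Return [(label, [trace ids])] from the nested symbol groups."""
    symbols = []
    for outer in root.findall("ink:traceGroup", INKML_NS):
        for group in outer.findall("ink:traceGroup", INKML_NS):
            label = group.find("ink:annotation", INKML_NS).text
            strokes = [view.get("traceDataRef")
                       for view in group.findall("ink:traceView", INKML_NS)]
            symbols.append((label, strokes))
    return symbols


root = ET.fromstring(SAMPLE)
traces = parse_traces(root)
symbols = parse_symbols(root)
latex = root.find("ink:annotation[@type='truth']", INKML_NS).text
```

With this sample, `symbols` groups the five traces into the three symbols `x`, `+` and `1`, and `latex` holds the expression level LaTeX string.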

The recognized expressions are the outputs of the competitors' recognition systems. They use the same InkML format, but without the ink information (only segmentation, label and MathML structure).

The total size of the dataset is ~130 MB.

Related Tasks

Math Expression Recognition

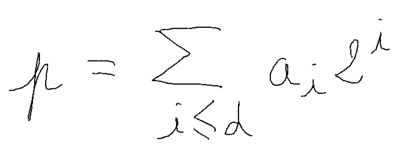

Purpose: The difficulty of recognizing a math expression depends on the number of different symbols, the number of allowed layouts and the grammar used. The competition defines 4 levels (tasks), from 41 symbols to 101 symbols, with increasing difficulty in the grammar of allowed expressions.

Evaluation Protocol: The competition defines the following evaluation protocol:

- participants can use the available training dataset (and more);

- the candidate systems take as input an InkML file (without ground truth) and must write as output an InkML file with the symbol segmentation, the recognition and the expression interpretation in MathML format. This is exactly the same format as the provided training dataset. In 2013, systems can also generate label graph (LG) files;

- the evaluation first converts the InkML files into LG files and then compares the resulting InkML and LG files with the ground truth using a provided script.

Several aspects are measured. These are:

- ST_Rec: the stroke classification rate, representing the percentage of strokes with the correct symbol,

- SYM_Seg: the symbol segmentation rate, defining the percentage of symbols correctly segmented,

- SYM_Rec: the symbol recognition rate, computing the performance of the symbol classifier when considering only the correctly segmented symbols,

- STRUCT: the MathML structure recognition rate, computing the percentage of expressions (MEs) having the correct MathML tree as output irrespective of the symbols attached to its leaves.

- EXP_Rec: the expression recognition rate, which gives the percentage of MEs recognized entirely correctly.

- EXP-Rec_1, _2, _3, giving the percentage of MEs recognized with at most 1 error, 2 errors and 3 errors (in terminal symbols or in MathML node tags) given that the tree structure is correct.

- Recall and Precision for segments and recognized segments (symbols)

- Recall and Precision for spatial relations between the symbols

As the MathML structure is not unique for a given expression, the evaluation tool provides a normalization step that produces canonical structures.
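To make the stroke level and segment level measures listed above more concrete, here is a simplified Python sketch. It is not the provided evaluation script and may differ from it in details; it assumes that a symbol is represented as a `(label, stroke ids)` pair and that a segment counts as correct only when its stroke set matches the ground truth exactly. All function names are hypothetical.

```python
def _ratio(numerator, denominator):
    """Safe division that returns 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def stroke_classification_rate(truth, output):
    """Fraction of ground-truth strokes whose output symbol label is correct."""
    truth_labels = {s: label for label, strokes in truth for s in strokes}
    output_labels = {s: label for label, strokes in output for s in strokes}
    correct = sum(1 for s, label in truth_labels.items()
                  if output_labels.get(s) == label)
    return _ratio(correct, len(truth_labels))


def segment_recall_precision(truth, output):
    """Recall and precision for segments and for recognized segments."""
    truth_segs = {frozenset(strokes) for _, strokes in truth}
    out_segs = {frozenset(strokes) for _, strokes in output}
    correct_segs = truth_segs & out_segs

    # A recognized segment must match both the stroke set and the label.
    truth_syms = {(frozenset(strokes), label) for label, strokes in truth}
    out_syms = {(frozenset(strokes), label) for label, strokes in output}
    correct_syms = truth_syms & out_syms

    return {
        "seg_recall": _ratio(len(correct_segs), len(truth_segs)),
        "seg_precision": _ratio(len(correct_segs), len(out_segs)),
        "sym_recall": _ratio(len(correct_syms), len(truth_syms)),
        "sym_precision": _ratio(len(correct_syms), len(out_syms)),
    }


# Hypothetical example: ground truth "x+1" and an output that splits
# the "+" into two symbols and mislabels the final "1" as "l".
truth = [("x", ["0", "1"]), ("+", ["2", "3"]), ("1", ["4"])]
output = [("x", ["0", "1"]), ("-", ["2"]), ("1", ["3"]), ("l", ["4"])]

st_rec = stroke_classification_rate(truth, output)
scores = segment_recall_precision(truth, output)
```

In this example two of the three ground-truth segments are recovered, but only one of them also carries the correct label, so the recognized-segment scores are lower than the segmentation scores.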

References

- Mouchère H., Viard-Gaudin C., Garain U., Kim D. H., Kim J. H., "CROHME2011: Competition on Recognition of Online Handwritten Mathematical Expressions", Proceedings of the 11th International Conference on Document Analysis and Recognition, ICDAR 2011, China (2011) (PDF)

- Mouchère H., Viard-Gaudin C., Garain U., Kim D. H., Kim J. H., "ICFHR 2012 - Competition on Recognition of Online Mathematical Expressions (CROHME2012)", Proceedings of the International Conference on Frontiers in Handwriting Recognition, ICFHR 2012, Italy (2012) (PDF)

Download

Version 2.0

Version 1.0

- The complete CROHME dataset including the evaluation tools (28 MB)

- CROHME sample files including examples of different inkml files and evaluation results produced with the included software (20 KB)
