Benchmarking of Table Structure Recognition Algorithms

Created: 2010-04-30

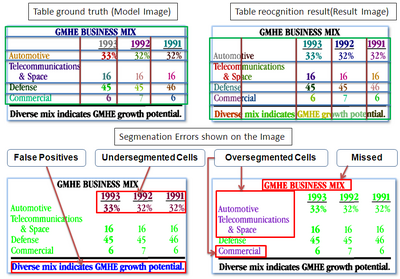

| + | [[Image:Table_UNLV_UW3_Results.png|400px|thumb|right|Example results obtained.]] | ||

| + | |||

Latest revision as of 18:01, 27 January 2011


Description

The aim of this task is to benchmark different table spotting and table structure recognition algorithms. In the past, several algorithms have been developed for table recognition tasks and evaluated on different datasets [1-4]. In addition, different researchers have used different benchmarking measures to evaluate the performance of their algorithms.

This task comprises 427 images from the publicly available UNLV dataset. We provide the table structure ground truth and word bounding box results for these document images. The images, together with the ground truth XML files and the word bounding box OCR files, can be used to generate pixel-accurate ground truth image files as described in [5]. Appropriate tools to generate these ground truth image files are provided below.

The competing algorithms need to produce their output in an XML format similar to the sample ground truth. Five example outputs from our T-RECS algorithm are included in the set of samples provided for reference. The output of each algorithm is used to produce result image files, which the evaluation framework then compares against the ground truth images to produce the following benchmarking measures:

- Correct Detections

- Partial Detections

- Over Segmentation

- Under Segmentation

- Missed

- False Positives

at the following levels of abstraction:

- Table

- Rows

- Columns

- Cells

- Row spanning cells

- Column spanning cells

- Row/column spanning cells

The evaluation software provided below (segment_evaluation.sh, part of the T-truth package) expects ground truth images in the "evaluation/gt" folder and result images in the "evaluation/result" folder. For each level of abstraction (table, row, column, etc.), the evaluation algorithm produces a file with the extension ".out" in the evaluation directory. Each file contains semicolon-separated benchmarking measures in the following order:

- gt_img

- result_img

- GroundTruthComponents

- SegmentationComponents

- Number of OverSegmentations

- Number of UnderSegmentations

- Number of FalseAlarms

- OverSegmentedComponents

- UnderSegmentedComponents

- False Alarms

- Missed

- Correct

- Partial Matches

These benchmarking measures are described in [5].
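As a reading aid, the field order listed above can be turned into a small parser for the ".out" files. This is only a minimal sketch, not part of the T-truth package: it assumes each line of a ".out" file holds one record with exactly the 13 fields in the order given, the snake_case field names are illustrative, and the function names `parse_out_line` and `parse_out_text` are hypothetical. Values are kept as strings because the page does not state their types.

```python
from typing import Dict, List

# Field order as listed on this page; the snake_case names are illustrative only.
FIELDS = [
    "gt_img",
    "result_img",
    "ground_truth_components",
    "segmentation_components",
    "num_over_segmentations",
    "num_under_segmentations",
    "num_false_alarms",
    "over_segmented_components",
    "under_segmented_components",
    "false_alarms",
    "missed",
    "correct",
    "partial_matches",
]


def parse_out_line(line: str) -> Dict[str, str]:
    """Split one semicolon-separated record into a dict keyed by field name.

    Assumption: one record per line, 13 fields. Values stay as strings.
    """
    parts = [p.strip() for p in line.strip().split(";")]
    # Tolerate a single trailing semicolon (assumed possible, not confirmed).
    if len(parts) == len(FIELDS) + 1 and parts[-1] == "":
        parts.pop()
    if len(parts) != len(FIELDS):
        raise ValueError(
            f"expected {len(FIELDS)} fields, got {len(parts)}: {line!r}"
        )
    return dict(zip(FIELDS, parts))


def parse_out_text(text: str) -> List[Dict[str, str]]:
    """Parse the full text of a .out file, skipping blank lines."""
    return [parse_out_line(l) for l in text.splitlines() if l.strip()]
```

A record could then be inspected with, for example, `parse_out_line(line)["correct"]`. If the actual files differ from the assumed layout (for instance, a header row or a different field count), the `ValueError` makes the mismatch visible rather than silently mislabelling columns.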

Related Dataset

Related Ground Truth Data

- Table Ground Truth for the UW3 and UNLV datasets

Related Software

- T-truth Software and Samples (4.5 Mb): http://www.iapr-tc11.org/dataset/TableGT_UW3_UNLV/t-truth.tar.gz

References

1. T. Kieninger and A. Dengel, "Applying the T-RECS table recognition system to the business letter domain", Proceedings of the 6th IAPR International Conference on Document Analysis and Recognition, IEEE CS, pp. 518-522, 2001

2. J. Hu, R. Kashi, D. Lopresti, and G. Wilfong, "Medium-independent table detection", Proceedings of the 8th SPIE Conference on Document Recognition and Retrieval, pp. 291-302, 2000

3. S. Mandal, S. Chowdhury, A. Das, and B. Chanda, "A simple and effective table detection system from document images", International Journal on Document Analysis and Recognition, Vol. 8, Num. 2-3, pp. 172-182, 2006

4. B. Gatos, D. Danatsas, I. Pratikakis, and S. J. Perantonis, "Automatic table detection in document images", Proceedings of the International Conference on Advances in Pattern Recognition, pp. 612-621, 2005

5. A. Shahab, F. Shafait, T. Kieninger, and A. Dengel, "An Open Approach towards the benchmarking of table structure recognition systems", Proceedings of DAS’10, pp. 113-120, June 9-11, 2010, Boston, MA, USA
