Difference between revisions of "MSRA Text Detection 500 Database (MSRA-TD500)"

(Created page with "Datasets -> Datasets List -> Current Page {| style="width: 100%" |- | align="right" | {| |- | '''Created: '''2012-10-26 |- | {{Last updated}} |} |} =Contact Author=…") |

(→Text Detection in Natural Images) |

||

| (6 intermediate revisions by the same user not shown) | |||

| Line 23: | Line 23: | ||

=Keywords= | =Keywords= | ||

| − | + | Text Detection, Natural Image, Arbitrary Orientation | |

=Description= | =Description= | ||

| − | [[Image:MSRA-TD500 Example.jpg| | + | [[Image:MSRA-TD500 Example.jpg|600px|thumb|right| Figure 1. Typical images from MSRA-TD500. Notice the red rectangles. They indicate the texts within them are labelled as difficult (due to blur or occlusion).]] |

| − | MSRA Text Detection 500 Database (MSRA-TD500) is collected and released publicly as a benchmark to evaluate text detection algorithms, for the purpose of tracking the recent progresses in the field of text detection in natural images, especially the advances in detecting texts of arbitrary orientations. | + | The MSRA Text Detection 500 Database (MSRA-TD500) is collected and released publicly as a benchmark to evaluate text detection algorithms, for the purpose of tracking the recent progresses in the field of text detection in natural images, especially the advances in detecting texts of arbitrary orientations. |

| − | MSRA Text Detection 500 Database (MSRA-TD500) contains 500 natural images, which are taken from indoor (office and mall) and outdoor (street) scenes using a | + | The MSRA Text Detection 500 Database (MSRA-TD500) contains 500 natural images, which are taken from indoor (office and mall) and outdoor (street) scenes using a pocket camera. The indoor images are mainly signs, doorplates and caution plates while the outdoor images are mostly guide boards and billboards in complex background. The resolutions of the images vary from 1296x864 to 1920x1280. |

| − | |||

| − | The dataset is divided into two parts: training set and test set. The training set contains 300 images randomly selected from the original dataset and the | + | The dataset is challenging because of both the diversity of the texts and the complexity of the background in the images. The text may be in different languages (Chinese, English or mixture of both), fonts, sizes, colors and orientations. The background may contain vegetation (e.g. trees and bushes) and repeated patterns (e.g. windows and bricks), which are not so distinguishable from text. |

| + | |||

| + | The dataset is divided into two parts: training set and test set. The training set contains 300 images randomly selected from the original dataset and the remaining 200 images constitute the test set. All the images in this dataset are fully annotated. The basic unit in this dataset is text line (see Figure 1) rather than word, which is used in the ICDAR datasets, because it is hard to partition Chinese text lines into individual words based on their spacing; even for English text lines, it is non-trivial to perform word partition without high level information. | ||

=Metadata and Ground Truth Data= | =Metadata and Ground Truth Data= | ||

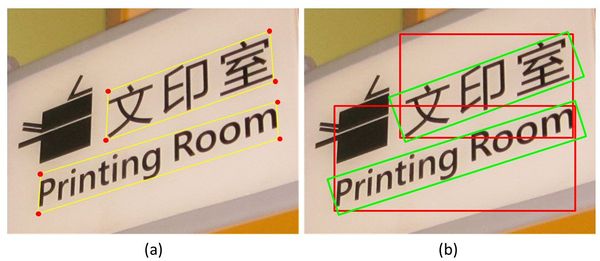

| − | [[Image:MSRA-TD500 GT Sample1.jpg| | + | [[Image:MSRA-TD500 GT Sample1.jpg|600px|thumb|right| Figure 2. Ground truth generation. (a) Human annotations. The annotators are required to locate and bound each text line using a four-vertex polygon (red dots and yellow lines). (b) Ground truth rectangles (green). The ground truth rectangle is generated automatically by fitting a minimum area rectangle using the polygon.]] |

The procedure of ground truth generation is shown in Figure 2. While current evaluation methods for text detection are designed for horizontal texts only, we proposed a new evaluation protocol (see [[#References|[1]]] for details). Minimum area rectangles are used in our protocol because they (green rectangles in Figure 2 (b)) are much tighter than axis-aligned rectangles (red rectangles in Figure 2 (b)). | The procedure of ground truth generation is shown in Figure 2. While current evaluation methods for text detection are designed for horizontal texts only, we proposed a new evaluation protocol (see [[#References|[1]]] for details). Minimum area rectangles are used in our protocol because they (green rectangles in Figure 2 (b)) are much tighter than axis-aligned rectangles (red rectangles in Figure 2 (b)). | ||

| − | In particular, to accommodate difficult | + | In particular, to accommodate difficult text (too small, occluded, blurry, or truncated) that is hard for text detection algorithms, each text instance considered to be difficult is given an additional “difficult” label (note the red rectangles in Figure 1). Detection misses of such difficult texts will not be punished. |

==Format of the ground truth files== | ==Format of the ground truth files== | ||

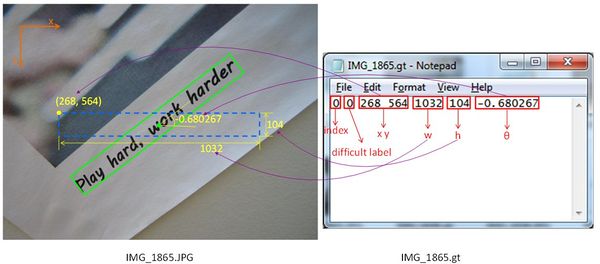

| − | [[Image:MSRA-TD500 GT Sample2.jpg| | + | [[Image:MSRA-TD500 GT Sample2.jpg|600px|thumb|right| Figure 3. Illustration of the ground truth file format. The index field can be ignored. The difficult label is “1” if the text is labeled as “difficult” and “0” otherwise.]] |

Each image in the database corresponds to a ground truth file, in which each line records the information of one text. The format of the ground truth files is illustrated in Figure 3. | Each image in the database corresponds to a ground truth file, in which each line records the information of one text. The format of the ground truth files is illustrated in Figure 3. | ||

---- | ---- | ||

| Line 48: | Line 49: | ||

=Related Tasks= | =Related Tasks= | ||

==Text Detection in Natural Images== | ==Text Detection in Natural Images== | ||

| − | 'Purpose:' | + | ''Purpose:'' To localize the positions and estimate the extents of texts in natural images |

| − | 'Importance:' Understanding text information embedded in natural scene is of great importance, as it has a large number of applications, for instance, image understanding, image and video search, geo-locating, and navigation | + | |

| − | 'Evaluation Protocol:' The evaluation protocol is stated in detail in [[#References|[1]]]. | + | ''Importance:'' Understanding text information embedded in natural scene is of great importance, as it has a large number of applications, for instance, image understanding, image and video search, geo-locating, and navigation |

| + | |||

| + | ''Evaluation Protocol:'' The evaluation protocol is stated in detail in [[#References|[1]]]. | ||

=References= | =References= | ||

| Line 57: | Line 60: | ||

=Download= | =Download= | ||

==Version 1.0== | ==Version 1.0== | ||

| − | * [http://www.iapr-tc11.org/dataset/MSRA-TD500/MSRA-TD500.zip The complete MSRA-TD500 dataset along with ground truth files] ( | + | * [http://www.iapr-tc11.org/dataset/MSRA-TD500/MSRA-TD500.zip The complete MSRA-TD500 dataset along with ground truth files] (96 MB) |

---- | ---- | ||

This page is editable only by [[IAPR-TC11:Reading_Systems#TC11_Officers|TC11 Officers ]]. | This page is editable only by [[IAPR-TC11:Reading_Systems#TC11_Officers|TC11 Officers ]]. | ||

Latest revision as of 16:40, 29 October 2012


Contact Author

Cong Yao
Huazhong University of Science and Technology
Email: yaocong2010@gmail.com

Current Version

1.0

Keywords

Text Detection, Natural Image, Arbitrary Orientation

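Description

Figure 1. Typical images from MSRA-TD500. The red rectangles mark text labeled as difficult (due to blur or occlusion).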

The MSRA Text Detection 500 Database (MSRA-TD500) was collected and publicly released as a benchmark for evaluating text detection algorithms. Its purpose is to track recent progress in detecting text in natural images, especially text in arbitrary orientations.

MSRA-TD500 contains 500 natural images taken with a pocket camera in indoor (office and mall) and outdoor (street) scenes. The indoor images mainly show signs, doorplates and caution plates, while the outdoor images are mostly guide boards and billboards against complex backgrounds. Image resolutions vary from 1296x864 to 1920x1280.

The dataset is challenging because of both the diversity of the text and the complexity of the backgrounds. Text may appear in different languages (Chinese, English or a mixture of both), fonts, sizes, colors and orientations. Backgrounds may contain vegetation (e.g. trees and bushes) and repeated patterns (e.g. windows and bricks) that are hard to distinguish from text.

The dataset is divided into a training set and a test set. The training set contains 300 images randomly selected from the original dataset, and the remaining 200 images form the test set. All images are fully annotated. The basic annotation unit is the text line (see Figure 1) rather than the word used in the ICDAR datasets, because Chinese text lines are hard to split into individual words based on spacing; even for English text lines, word partitioning is non-trivial without high-level information.
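Because the split is fixed (300 training and 200 test images) and each image has its own ground truth file, a loader mainly needs to pair the two. A minimal Python sketch, assuming (this page does not confirm it) that each split lives in its own directory, hypothetically `MSRA-TD500/train` and `MSRA-TD500/test`, with every image stored next to a same-named `.gt` file:

```python
from pathlib import Path


def pair_images_with_ground_truth(split_dir):
    """Return sorted (image_path, gt_path) pairs for one split directory.

    Hypothetical layout: IMG_0001.JPG is stored next to IMG_0001.gt.
    """
    split_dir = Path(split_dir)
    pairs = []
    for image_path in sorted(split_dir.iterdir()):
        # Skip anything that is not a JPEG image (including the .gt files).
        if image_path.suffix.lower() not in {".jpg", ".jpeg"}:
            continue
        gt_path = image_path.with_suffix(".gt")
        if not gt_path.exists():
            raise FileNotFoundError(f"missing ground truth for {image_path.name}")
        pairs.append((image_path, gt_path))
    return pairs
```

If the assumed layout holds, `pair_images_with_ground_truth("MSRA-TD500/test")` should yield 200 pairs; failing loudly on a missing `.gt` file keeps an incomplete extraction from silently shrinking the benchmark.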

Metadata and Ground Truth Data

The ground truth generation procedure is shown in Figure 2. Because existing evaluation methods for text detection are designed for horizontal text only, we proposed a new evaluation protocol (see [1] for details). The protocol uses minimum-area rectangles (green in Figure 2 (b)) because they fit the text much more tightly than axis-aligned rectangles (red in Figure 2 (b)).
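Fitting a minimum-area rectangle to an annotated four-vertex polygon can be illustrated with a small, self-contained Python sketch. The page does not say which tool produced the ground truth; this sketch relies on the standard observation that a minimum-area enclosing rectangle has one side parallel to an edge of the points' convex hull, so it tries every hull edge direction. OpenCV's `cv2.minAreaRect` offers an equivalent routine with its own angle convention (in degrees).

```python
import math


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def min_area_rect(polygon):
    """Return (cx, cy, width, height, angle) of the minimum-area enclosing rectangle.

    angle (radians) is the direction of the hull edge the 'width' side lies along.
    """
    hull = convex_hull([(float(x), float(y)) for x, y in polygon])
    best = None
    n = len(hull)
    for i in range(n):
        x0, y0 = hull[i]
        x1, y1 = hull[(i + 1) % n]
        theta = math.atan2(y1 - y0, x1 - x0)
        c, s = math.cos(theta), math.sin(theta)
        # Express every hull point in a frame aligned with this edge.
        us = [x * c + y * s for x, y in hull]
        vs = [-x * s + y * c for x, y in hull]
        w, h = max(us) - min(us), max(vs) - min(vs)
        if best is None or w * h < best[0]:
            um, vm = (max(us) + min(us)) / 2, (max(vs) + min(vs)) / 2
            # Rotate the rectangle center back into image coordinates.
            best = (w * h, um * c - vm * s, um * s + vm * c, w, h, theta)
    return best[1:]
```

For a polygon tilted by 45 degrees, such as `[(0, 1), (1, 0), (2, 1), (1, 2)]`, the fitted rectangle has area 2, half the area of the axis-aligned box around the same points, which is the tightness argument made above.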

To accommodate text that is hard for detection algorithms (too small, occluded, blurry, or truncated), each such text instance receives an additional “difficult” label (note the red rectangles in Figure 1). Missed detections of difficult text are not penalized.
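One literal reading of this rule, sketched in Python: a difficult instance that is missed is dropped from the recall denominator, while a difficult instance that is detected still counts as a hit. How detections matched to difficult instances affect precision is not specified on this page; see [1]. The input, one hypothetical `(is_difficult, is_detected)` pair per ground truth text line produced by some matching step, is an assumption.

```python
def recall_ignoring_missed_difficult(gt_flags):
    """Recall in which missed 'difficult' text lines are not penalized.

    gt_flags: iterable of (is_difficult, is_detected) booleans, one per
    ground truth text line (hypothetical input format).
    """
    gt_flags = list(gt_flags)
    hits = sum(1 for _, detected in gt_flags if detected)
    # Every instance counts except a difficult one that was missed.
    counted = sum(1 for difficult, detected in gt_flags if detected or not difficult)
    # Convention: if nothing is counted, nothing countable was missed.
    return hits / counted if counted else 1.0
```

With flags `[(False, True), (False, False), (True, False), (True, True)]` this gives 2/3, whereas plain recall over all four instances would be 2/4.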

Format of the ground truth files

Each image in the database corresponds to a ground truth file, in which each line records the information of one text. The format of the ground truth files is illustrated in Figure 3.
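Figure 3 is the authoritative description of the format. The Python sketch below assumes a whitespace-separated layout of seven fields, `index difficult x y width height angle`, where (x, y) is the top-left corner of the unrotated rectangle and the angle (in radians) rotates it about its center. Treat the field order and the rotation convention as assumptions to check against Figure 3; only the ignorable index field and the “1”/“0” difficult label come from this page.

```python
import math
from dataclasses import dataclass


@dataclass
class TextLine:
    difficult: bool
    x: float       # assumed: top-left x of the rectangle before rotation
    y: float       # assumed: top-left y of the rectangle before rotation
    width: float
    height: float
    angle: float   # assumed: rotation in radians about the rectangle center

    def corners(self):
        """Four corner points after rotating the rectangle about its center."""
        cx, cy = self.x + self.width / 2, self.y + self.height / 2
        c, s = math.cos(self.angle), math.sin(self.angle)
        result = []
        for dx, dy in ((-1, -1), (1, -1), (1, 1), (-1, 1)):
            ox, oy = dx * self.width / 2, dy * self.height / 2
            result.append((cx + ox * c - oy * s, cy + ox * s + oy * c))
        return result


def parse_gt_line(line):
    """Parse one record in the assumed 'index difficult x y w h angle' layout."""
    fields = line.split()
    if len(fields) != 7:
        raise ValueError(f"expected 7 fields, got {len(fields)}: {line!r}")
    _index, difficult, *numbers = fields  # the index field can be ignored
    x, y, w, h, angle = map(float, numbers)
    return TextLine(difficult == "1", x, y, w, h, angle)


def load_gt_file(path):
    """Parse every non-blank line of one ground truth file."""
    with open(path, encoding="utf-8") as handle:
        return [parse_gt_line(line) for line in handle if line.strip()]
```

With an angle of 0, the record `0 1 10 20 40 10 0` yields a difficult text line whose corners are (10, 20), (50, 20), (50, 30) and (10, 30).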

Related Tasks

Text Detection in Natural Images

Purpose: To localize text in natural images and estimate its extent.

Importance: Understanding text embedded in natural scenes is important for many applications, for instance image understanding, image and video search, geo-locating, and navigation.

Evaluation Protocol: The evaluation protocol is stated in detail in [1].
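As a rough, non-authoritative illustration of an orientation-aware match test, the Python sketch below treats a detection D as matching a ground truth rectangle G when their angle difference is below π/8 and the overlap ratio (intersection over union) of D and G, each rotated to horizontal about its own center, exceeds 0.5. Both thresholds and this overlap definition are assumptions here, not a restatement of [1]; consult [1] for the exact rules. Rectangles are assumed to be `(cx, cy, w, h, angle)` tuples with the angle in radians.

```python
import math


def _axis_aligned(rect):
    """Drop the rotation: keep the center, width and height."""
    cx, cy, w, h, _ = rect
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def overlap_ratio(det, gt):
    """IoU of two rectangles after rotating each to horizontal about its center."""
    ax0, ay0, ax1, ay1 = _axis_aligned(det)
    bx0, by0, bx1, by1 = _axis_aligned(gt)
    iw = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    ih = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = iw * ih
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0


def is_match(det, gt, max_angle=math.pi / 8, min_overlap=0.5):
    """Assumed criterion: similar orientation and sufficient overlap."""
    diff = abs(det[4] - gt[4]) % math.pi
    diff = min(diff, math.pi - diff)  # a rectangle's orientation repeats every pi
    return diff < max_angle and overlap_ratio(det, gt) > min_overlap
```

Folding the angle difference modulo π is a design choice of this sketch: it keeps a rectangle and the same rectangle rotated by 180 degrees from being counted as differently oriented.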

References

- [1] C. Yao, X. Bai, W. Liu, Y. Ma and Z. Tu. Detecting Texts of Arbitrary Orientations in Natural Images. CVPR 2012.

Download

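Version 1.0

- The complete MSRA-TD500 dataset along with ground truth files (96 MB): http://www.iapr-tc11.org/dataset/MSRA-TD500/MSRA-TD500.zip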
