IBN SINA: A database for research on processing and understanding of Arabic manuscripts images

Contact Author

Prof. Mohamed Cheriet, Synchromedia Laboratory, ETS, Montréal (QC), Canada H3C 1K3. E-mail: mohamed.cheriet@etsmtl.ca Tel: +1(514)396-8972 Fax: +1(514)396-8595

Keywords

Arabic language, shape recognition, skeletonization, character recognition

Description

The database is built on a manuscript image provided by the Institute of Islamic Studies (IIS), McGill University, Montreal. The author of the manuscript was Sayf al-Din Abu al-Hasan Ali ibn Abi Ali ibn Muhammad al-Amidi (d. 1243 A.D.). The title of the manuscript is Kitab Kashf al-tamwihat fi sharh al-Tanbihat (i.e., Commentary on Ibn Sina's al-Isharat wa-al-tanbihat). Attention to al-Isharat wa-al-tanbihat was particularly intense between the late twelfth century and the first half of the fourteenth century, when more than a dozen comprehensive commentaries were composed. Kashf al-tamwihat fi sharh al-Tanbihat, one of the early commentaries on al-Isharat wa-al-tanbihat, is unpublished and still awaits a critical edition by scholars.

The selected dataset is obtained from 51 folios and corresponds to 20722 shapes (connected components) of Arabic script extracted from the historical manuscript.

The shapes are extracted from the enhanced and restored document images of the manuscript [1, 2] and represent blobs of ink in the original manuscript. To extract the shapes, a complete binarization process is applied to the input images, consisting of an enhancement/restoration step followed by a binarization step. The enhancement step uses multi-level classifiers, including the Stroke Map, the Edge Profile and the Estimated Background, which are defined in reference [3]. Skeletonization uses a thinning process followed by a correction step that recovers missed branch points.
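This page does not say how branch and end points are located on the skeleton. As an illustration only, the sketch below uses the crossing number, a common heuristic that counts 0-to-1 transitions around each pixel's 8-neighbourhood. It is not the authors' thinning or correction step, and the function name `crossing_number` is hypothetical.

```python
import numpy as np

def crossing_number(skeleton):
    """Count 0->1 transitions around each skeleton pixel's 8-neighbourhood."""
    padded = np.pad(np.asarray(skeleton, dtype=np.uint8), 1)
    rows, cols = padded.shape[0] - 2, padded.shape[1] - 2
    # Neighbour offsets in clockwise order: N, NE, E, SE, S, SW, W, NW.
    offsets = [(-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1)]
    ring = [padded[1 + dy:1 + dy + rows, 1 + dx:1 + dx + cols] for dy, dx in offsets]
    transitions = np.zeros((rows, cols), dtype=int)
    # Compare each neighbour with the next one around the ring (wrapping at the end).
    for current, following in zip(ring, ring[1:] + ring[:1]):
        transitions += (current == 0) & (following == 1)
    # Only pixels that belong to the skeleton keep their count.
    return transitions * (padded[1:-1, 1:-1] == 1)

# Synthetic one-pixel-wide "+" shape standing in for a thinned connected component.
plus = np.zeros((5, 5), dtype=np.uint8)
plus[2, :] = 1
plus[:, 2] = 1

cn = crossing_number(plus)
end_points = np.argwhere(cn == 1)      # one outgoing stroke
branch_points = np.argwhere(cn >= 3)   # three or more outgoing strokes
print(len(end_points), branch_points.tolist())
```

On a one-pixel-wide skeleton, pixels with a crossing number of 1 are stroke ends and pixels with 3 or more are junctions. Real skeletons often contain small artefacts near junctions, which is presumably why a separate correction step is applied in the original pipeline.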

Version 1.0

The first version of the dataset comprises the feature vectors of the 20722 shapes (sub-words extracted as connected components). Each feature vector consists of 92 features, divided into two parts: (1) 8 global features and (2) 84 skeleton-based features. The second part can in turn be divided into two sub-parts: (a) topological features based on the relation to the branch/end/singular points on the skeleton and (b) geometrical features related to the orientation and position of the sub-strokes that make up the connected component or shape under study. The feature vector is regularized in terms of its length. The details can be found in section 4 of the paper [1].

All non-normalised feature vectors are provided in a single space-delimited text file, where each row corresponds to the feature vector of a single connected component.
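A minimal sketch of reading such a file with NumPy is given here. It assumes that each row holds exactly 92 whitespace-separated numbers and that the 8 global features occupy the first columns; the column order is an assumption and should be checked against the paper [1]. The function name `load_features` and the in-memory demo data are hypothetical stand-ins for the real file.

```python
import io
import numpy as np

N_GLOBAL = 8       # global features, as stated in the description
N_SKELETON = 84    # skeleton-based features
N_FEATURES = N_GLOBAL + N_SKELETON

def load_features(source):
    """Read a space-delimited feature file: one row per connected component."""
    features = np.loadtxt(source, ndmin=2)
    if features.shape[1] != N_FEATURES:
        raise ValueError(f"expected {N_FEATURES} columns, got {features.shape[1]}")
    # Assumption: the global features come first, the skeleton-based ones after.
    return features[:, :N_GLOBAL], features[:, N_GLOBAL:]

# Synthetic stand-in for the real file: 3 shapes with 92 values each.
demo_rows = [" ".join(str(float(i + j)) for j in range(N_FEATURES)) for i in range(3)]
demo_file = io.StringIO("\n".join(demo_rows))

global_part, skeleton_part = load_features(demo_file)
print(global_part.shape, skeleton_part.shape)
```

With the real file, `source` would be its path on disk. Because the vectors are not normalised, any scaling needed by a classifier has to be applied after loading.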

Version 2.0

The extended version of the dataset includes the corresponding image data of the shapes. The dataset comprises a series of MATLAB files, one for each folio of the manuscript, each containing a structure with all the available information, including the images and features of each sub-word. The metadata provided include the feature vector calculated from the skeleton of the shapes [1]. Detailed information about the structure of the files is provided in the guide.

Two editions of the dataset v2.0 are provided for greater flexibility: one containing just the binarized images of the shapes (small file) and another containing both the original color images and the binarized ones (large file). The rest of the information in both files is identical.

Related Ground Truth Data

Version 1.0

For each shape in the dataset, 15 binary labels are provided, one for each of 15 problems. For each problem, the label specifies whether the string of that shape contains the Arabic letter associated with that problem. For example, using Latin letters for simplicity, if the string is “ab”, the label for the problem of letter “a” will be 1, and the label for the problem of letter “c” will be -1.
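The labelling rule can be sketched in a few lines. The sketch assumes that every letter is represented by a single character in the shape's string; the function name `problem_label` is hypothetical.

```python
def problem_label(shape_string, letter):
    """Return 1 if `letter` occurs in the shape's string, otherwise -1."""
    return 1 if letter in shape_string else -1

# The Latin-letter example from the text.
label_a = problem_label("ab", "a")
label_c = problem_label("ab", "c")
print(label_a, label_c)
```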

Only the 15 letters that have at least 1000 positive samples each in the dataset are considered. They are:

- Ein: abbreviated as EU,

- Aleph: abbreviated as aL,

- Be: abbreviated as bL,

- Dal: abbreviated as dL,

- Fa: abbreviated as fL,

- Ha: abbreviated as hL,

- Kaf: abbreviated as kL,

- Lam: abbreviated as lL,

- Mim: abbreviated as mL,

- Nun: abbreviated as nL,

- Ghain: abbreviated as qL,

- Ra: abbreviated as rL,

- Ta: abbreviated as tL,

- Waw: abbreviated as vL,

- Ya: abbreviated as yL.

The problem names are abbreviated using the Finglish encoding, which maps each Arabic letter to an ASCII character. Please see the report for the details.

Ground Truth Specification: The ground truth data are specified as a single 20722 × 15 matrix containing the labels of the 15 problems for all shapes in the dataset. They are provided as a single space-delimited text file.
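As a rough sketch, the label matrix can be read with NumPy and the positive samples counted per problem. Two assumptions are made here and should be verified against the report: the columns follow the order in which the 15 problems are listed on this page, and the entries are 1 and -1. The function names and the in-memory demo matrix are hypothetical.

```python
import io
import numpy as np

# Assumption: column order matches the order of the list on this page.
PROBLEMS = ["EU", "aL", "bL", "dL", "fL", "hL", "kL", "lL",
            "mL", "nL", "qL", "rL", "tL", "vL", "yL"]

def load_labels(source):
    """Read the space-delimited label matrix (one row per shape, 15 columns)."""
    labels = np.loadtxt(source, ndmin=2).astype(int)
    if labels.shape[1] != len(PROBLEMS):
        raise ValueError(f"expected {len(PROBLEMS)} columns, got {labels.shape[1]}")
    if not np.isin(labels, (-1, 1)).all():
        raise ValueError("labels are expected to be 1 or -1")
    return labels

def positives_per_problem(labels):
    """Map each problem abbreviation to its number of positive samples."""
    return dict(zip(PROBLEMS, (labels == 1).sum(axis=0).tolist()))

# Synthetic stand-in for the real file: 4 shapes with alternating labels.
demo_rows = [" ".join("1" if (i + j) % 2 == 0 else "-1" for j in range(15))
             for i in range(4)]
labels = load_labels(io.StringIO("\n".join(demo_rows)))
counts = positives_per_problem(labels)
print(counts)
```

On the real file, each count should be at least 1000, which is the selection criterion stated for the 15 letters.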

Version 2.0

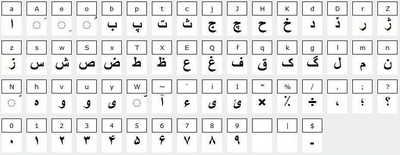

The label of each letter-block (equivalent to "shape" or "connected component") was manually assigned and verified. Each label is a sequence of Latin characters corresponding to the transliteration of the letter-block. For this purpose, Finglish transliteration is used (see the accompanying table). These are the same labels as used for version 1.0.

Unrecognizable letter-blocks are labelled as 'NaN'.

Labelling information is provided as a field within the connected component structure (MATLAB file). See the guide provided for further information on the structure of the provided files, and examples of use.
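Once the letter-blocks of a folio have been read into memory, the unrecognizable ones can be dropped before training or evaluation. The sketch below assumes each letter-block is available as a dictionary; the key name `label` and the sample records are hypothetical, since the actual field names are documented in the guide.

```python
def recognizable_blocks(blocks, label_key="label"):
    """Keep only letter-blocks whose label is not the 'NaN' placeholder."""
    return [block for block in blocks if block[label_key] != "NaN"]

# Hypothetical records standing in for entries read from a folio file.
blocks = [{"label": "ab"}, {"label": "NaN"}, {"label": "ba"}]
kept = recognizable_blocks(blocks)
print(len(kept), "of", len(blocks), "letter-blocks have a usable label")
```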

Related Tasks

References

- [1] Reza Farrahi Moghaddam, Mohamed Cheriet, Mathias M. Adankon, Kostyantyn Filonenko, and Robert Wisnovsky, “IBN SINA: A database for research on processing and understanding of Arabic manuscripts images”, Proceedings of DAS’10, June 9-11, 2010, Boston, MA, USA.

- [2] Reza Farrahi Moghaddam and Mohamed Cheriet, “Application of Multi-level Classifiers and Clustering for Automatic Word-spotting in Historical Document Images”, ICDAR’09, pp. 511-515, July 26-29, 2009, Barcelona, Spain.

- [3] Reza Farrahi Moghaddam and Mohamed Cheriet, “RSLDI: Restoration of single-sided low-quality document images”, Pattern Recognition, 42, pp. 3355-3364, 2009.

Submitted Files

Version 1.0

Version 2.0

- The full dataset including just binarized images (17 MB).

- The full dataset including both binarized and color images (170 MB).

- A guide to the dataset (0.5 MB).
